Databricks is a widely used platform for big data and advanced analytics, offering a unified environment for data engineering, data science, and machine learning. Databricks Asset Bundles (DAB) add a structured, code-driven way to define, manage, and deploy the resources a data team depends on. This post explains what a Databricks Asset Bundle is, why it is needed, and which problems it solves.

What is a Databricks Asset Bundle?

A Databricks Asset Bundle (DAB) is a project whose configuration files define resources and assets within a Databricks workspace. These files are written in YAML, with a `databricks.yml` file at the root of the bundle, and cover clusters, jobs, and notebooks as well as libraries, secret references, and permissions. Because the definitions live alongside the code, DAB provides a version-controlled, reproducible, and automated approach to managing the Databricks environment.

Why Is a Databricks Asset Bundle Needed?

The need for a Databricks Asset Bundle comes from the difficulty of managing large-scale data projects by hand. The main reasons are:

Simplified Environment Setup:

- Setting up a Databricks environment manually can be error-prone and time-consuming. DAB automates this process, ensuring that the environment is configured consistently every time.

Version Control:

- By storing configuration files in version control systems (like Git), teams can track changes, revert to previous versions, and collaborate more effectively.

Reproducibility:

- In data science and machine learning, reproducibility is crucial. DAB lets the jobs, compute settings, and library versions behind an experiment or model be recreated from the same configuration, which reduces the “it works on my machine” problem.

Scalability:

- As organizations grow, managing multiple Databricks environments becomes challenging. DAB allows for the scalable deployment and management of resources across different environments (development, testing, production).

Enhanced Collaboration:

- With clearly defined configuration files, different team members can work on various aspects of the Databricks environment concurrently without stepping on each other’s toes.
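The development and production environments mentioned under Scalability map onto what a bundle calls targets. Here is a minimal sketch in Python of what a `targets` mapping might contain. The bundle name, target names, and workspace URLs are placeholders chosen for illustration, and the dictionary is printed as JSON, which a YAML parser also accepts.

```python
import json

# Hypothetical bundle settings: the name and workspace URLs are placeholders.
bundle_config = {
    "bundle": {"name": "sales_pipeline"},
    "targets": {
        "dev": {
            "mode": "development",
            "default": True,
            "workspace": {"host": "https://dev.example.cloud.databricks.com"},
        },
        "prod": {
            "mode": "production",
            "workspace": {"host": "https://prod.example.cloud.databricks.com"},
        },
    },
}


def default_target(config: dict) -> str:
    """Return the name of the target marked as the default."""
    for name, settings in config["targets"].items():
        if settings.get("default"):
            return name
    raise ValueError("no default target defined")


if __name__ == "__main__":
    print(json.dumps(bundle_config, indent=2))
    print("default target:", default_target(bundle_config))
```

Keeping every environment in one file means the differences between development and production are visible in a single diff.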

Problems Solved by Databricks Asset Bundle

Databricks Asset Bundle addresses several pain points that data teams often encounter:

Manual Configuration Errors:

- Manually configuring clusters, jobs, and other resources can lead to inconsistencies and errors. DAB reduces these by replacing manual setup with a standardized, reviewable configuration.

Environment Drift:

- Over time, manual tweaks and changes can cause the environment to drift from its original configuration, leading to unpredictable behavior. Redeploying the bundle reapplies the declared configuration, returning the bundle's resources to a known state.

Dependency Management:

- Managing dependencies across different notebooks, jobs, and libraries can be complex. DAB lets each job declare its libraries explicitly, so pinned versions are installed the same way on every run.

Security and Compliance:

- Managing secrets (such as API keys and passwords) securely is a major concern. Bundle configuration can refer to secrets held in Databricks’ secret management system instead of embedding their values, which keeps sensitive information out of version control.

Operational Overhead:

- Automating the deployment of resources reduces the operational overhead on data engineering teams, allowing them to focus on more strategic tasks.
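As an illustration of the Dependency Management point, here is a small Python sketch that flags PyPI libraries declared without an exact version pin. It assumes a job is represented as a dictionary whose tasks carry a `libraries` list with `pypi` entries; the job name, task keys, and package versions are made up for the example.

```python
def unpinned_pypi_libraries(job: dict) -> list:
    """Return PyPI package specs in a job's tasks that lack an exact '==' pin."""
    unpinned = []
    for task in job.get("tasks", []):
        for library in task.get("libraries", []):
            package = library.get("pypi", {}).get("package")
            if package is not None and "==" not in package:
                unpinned.append(package)
    return unpinned


# Hypothetical job definition used only to exercise the check.
example_job = {
    "name": "nightly_etl",
    "tasks": [
        {
            "task_key": "ingest",
            "libraries": [{"pypi": {"package": "requests==2.32.3"}}],
        },
        {
            "task_key": "transform",
            "libraries": [{"pypi": {"package": "pandas"}}],
        },
    ],
}

if __name__ == "__main__":
    for package in unpinned_pypi_libraries(example_job):
        print("unpinned library:", package)
```

A check like this could run in code review or CI so that an unpinned dependency is caught before deployment.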

Key Components of a Databricks Asset Bundle

To understand how DAB works, let’s look at its key components:

Clusters:

Configuration files define cluster properties such as size, type, runtime version, and auto-scaling settings.

Jobs:

Jobs can be defined with their tasks, schedules, and dependencies, ensuring automated and repeatable execution.

Notebooks:

Notebooks and other source files are uploaded to the workspace when the bundle is deployed, preserving the project's folder structure and code integrity.

Libraries:

Libraries required for the jobs and notebooks are specified, ensuring that all necessary dependencies are met.

Secrets:

Secret scopes and references to the secrets they hold are managed, so sensitive values can be used without being written into the configuration files.

Permissions:

Access controls for users and groups are defined, ensuring that the right people have the right access.

Data and DBFS Resources:

Configurations for data mounts, Delta tables, and DBFS resources are included, facilitating seamless data access.

MLflow Experiments and Models:

Definitions for tracking machine learning experiments and models using MLflow are provided.

Dashboards and SQL Queries:

Interactive dashboards and the SQL endpoints (now called SQL warehouses) that serve them are configured for data visualization and querying.
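To show how the cluster and job components fit together, the following Python sketch builds a job definition with a schedule, an autoscaling job cluster, and two dependent tasks. The resource key, notebook paths, runtime version, and node type are illustrative assumptions and would need to match what your workspace and cloud provider offer.

```python
import json

# Hypothetical job resource: names, paths, and cluster sizes are placeholders.
job_resource = {
    "resources": {
        "jobs": {
            "nightly_etl": {
                "name": "nightly_etl",
                # Quartz cron syntax: run every day at 02:00.
                "schedule": {
                    "quartz_cron_expression": "0 0 2 * * ?",
                    "timezone_id": "UTC",
                },
                "job_clusters": [
                    {
                        "job_cluster_key": "etl_cluster",
                        "new_cluster": {
                            "spark_version": "15.4.x-scala2.12",
                            "node_type_id": "i3.xlarge",
                            "autoscale": {"min_workers": 1, "max_workers": 4},
                        },
                    }
                ],
                "tasks": [
                    {
                        "task_key": "ingest",
                        "job_cluster_key": "etl_cluster",
                        "notebook_task": {"notebook_path": "./notebooks/ingest.py"},
                    },
                    {
                        "task_key": "transform",
                        "depends_on": [{"task_key": "ingest"}],
                        "job_cluster_key": "etl_cluster",
                        "notebook_task": {"notebook_path": "./notebooks/transform.py"},
                    },
                ],
            }
        }
    }
}


def upstream_tasks(job: dict, task_key: str) -> list:
    """Return the task keys that the given task depends on directly."""
    for task in job["tasks"]:
        if task["task_key"] == task_key:
            return [dep["task_key"] for dep in task.get("depends_on", [])]
    raise KeyError(task_key)


if __name__ == "__main__":
    print(json.dumps(job_resource, indent=2))
```

Both tasks share one job cluster here, so the `transform` task reuses the compute that `ingest` started instead of launching its own.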

Implementing Databricks Asset Bundle: A Step-by-Step Guide

Implementing a Databricks Asset Bundle involves several steps:

Define Configuration Files:

Create a `databricks.yml` file at the root of your project, plus any additional YAML files it includes, describing your clusters, jobs, notebooks, libraries, secret references, and other assets.

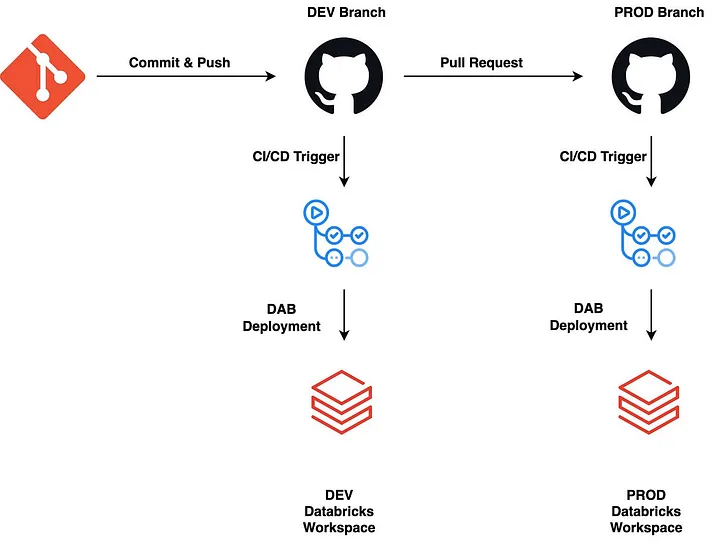
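As a rough idea of what such a file holds, this Python sketch assembles a minimal bundle configuration and renders it as text. The bundle name, job key, and notebook path are hypothetical, compute settings are omitted for brevity, and the output is JSON, which is also valid YAML and could be adapted into a `databricks.yml`.

```python
import json


def minimal_bundle(name: str, notebook_path: str) -> dict:
    """Build a small bundle configuration with one job and one target."""
    return {
        "bundle": {"name": name},
        "resources": {
            "jobs": {
                # "hello_job" is an illustrative resource key.
                "hello_job": {
                    "name": "hello_job",
                    "tasks": [
                        {
                            "task_key": "hello",
                            "notebook_task": {"notebook_path": notebook_path},
                        }
                    ],
                }
            }
        },
        "targets": {"dev": {"mode": "development", "default": True}},
    }


def render(config: dict) -> str:
    """Serialize the configuration as indented JSON text."""
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(render(minimal_bundle("demo_bundle", "./src/hello.py")))
```

Generating the file from code is optional; most teams would write the YAML by hand, and the sketch only makes the overall shape easier to see.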

Version Control:

Store these configuration files in a version control system like Git to enable collaboration and tracking.

Deploy Using CLI or API:

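Use the Databricks CLI's `bundle` commands, for example `databricks bundle validate` followed by `databricks bundle deploy`, to deploy these configurations to your Databricks workspace. The CLI calls the Databricks REST API on your behalf.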
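A deployment script might wrap those CLI calls as in the sketch below. It assumes the `databricks` executable is installed and already authenticated; the helper names `bundle_command` and `deploy` are made up for this example and are not part of any Databricks library.

```python
import subprocess


def bundle_command(action: str, target: str, *extra: str) -> list:
    """Build a `databricks bundle <action>` command line for one target."""
    return ["databricks", "bundle", action, "--target", target, *extra]


def deploy(target: str) -> None:
    """Validate the bundle, then deploy it; stop at the first failure."""
    for action in ("validate", "deploy"):
        subprocess.run(bundle_command(action, target), check=True)


if __name__ == "__main__":
    # Show the commands without running them.
    print(" ".join(bundle_command("validate", "dev")))
    print(" ".join(bundle_command("deploy", "dev")))
    print(" ".join(bundle_command("run", "dev", "nightly_etl")))
```

Running `validate` first catches configuration mistakes before anything in the workspace changes.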

Automate with CI/CD:

Integrate the deployment process into your CI/CD pipeline to automate environment setup and updates.
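One way a pipeline step could decide what to deploy is sketched below in Python. The branch-to-target mapping is a hypothetical convention, and the script only prints the CLI commands it would run; it assumes credentials are supplied through the `DATABRICKS_HOST` and `DATABRICKS_TOKEN` environment variables.

```python
import os
from typing import Optional

# Hypothetical convention: which Git branch deploys to which bundle target.
BRANCH_TARGETS = {"main": "prod", "develop": "dev"}

REQUIRED_ENV = ("DATABRICKS_HOST", "DATABRICKS_TOKEN")


def target_for_branch(branch: str) -> Optional[str]:
    """Return the bundle target for a branch, or None if it is not deployed."""
    return BRANCH_TARGETS.get(branch)


def missing_credentials(env: dict) -> list:
    """List required environment variables that are unset or empty."""
    return [name for name in REQUIRED_ENV if not env.get(name)]


def plan(branch: str, env: dict) -> list:
    """Return the CLI commands a pipeline run would execute for this branch."""
    target = target_for_branch(branch)
    if target is None:
        return []
    missing = missing_credentials(env)
    if missing:
        raise RuntimeError("missing credentials: " + ", ".join(missing))
    return [
        f"databricks bundle validate --target {target}",
        f"databricks bundle deploy --target {target}",
    ]


if __name__ == "__main__":
    # BRANCH_NAME is a placeholder; CI systems expose the branch differently.
    for command in plan(os.environ.get("BRANCH_NAME", "develop"), dict(os.environ)):
        print(command)
```

Feature branches that are not in the mapping produce an empty plan, so only agreed branches ever reach a shared workspace.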

Conclusion

The Databricks Asset Bundle is a practical tool for managing and deploying resources within the Databricks environment. It addresses many of the challenges of manual configuration and improves consistency, reproducibility, and security. By adopting DAB, data teams can streamline their workflows, reduce operational overhead, and spend more time drawing insights from their data. As data projects become more complex, tools like the Databricks Asset Bundle become an increasingly important part of the modern data toolkit.